Scope of the Problem

Click on the video above and try to analyze the accident that occurred. What do you think happened? Could it have been prevented? For this lab, I am going to provide a brief analysis of the factors that led up to this incident and then provide recommendations on what could be done differently in the future.

Background

On Tuesday, March 26th, 2019, at approximately 10:30am, our class was informed that we would fly a quick mission at Martel Forest using the Yuneec H520 platform. To help us understand what the location looked like, we were provided the satellite image shown in Figure 1.

Figure 1: Martel Forest

At approximately 11:00am, our class arrived at Martel Forest. According to the local KLAF METAR, the wind was from the northeast at 7 mph, skies were clear with 10 sm visibility, and the temperature was 40 °F. We used the KLAF METAR because the UAS operating area was just outside the KLAF Class D airspace, so we considered it an accurate source of weather information. After splitting into teams, we were instructed to walk around the forest and note any obstructions. We estimated that the trees were roughly 40 meters tall, as seen in Figure 2.

Figure 2: Ground View of Forest

After consulting the B4UFLY mobile app, the class confirmed that we were in an area with minimal hazards and assigned two pilots to set up the UAS, along with a team of visual observers and a ground control crew. For this mission, I was a Visual Observer who took field notes of the operation, and because several other VOs were present, I was also allowed to video record the flight. Figure 3 shows the ground control point case in the bottom left and the Yuneec H520 UAS case on the tailgate of the truck.

Figure 3: Ground Control Points and UAS Case

Figure 4 shows me taking notes on the UAS transmitter while cross-referencing information on my iPad. In the background, the ground control crew is heading out to deploy the GCPs. Of the 15 of us at the mission site, 10 (including myself) held Part 107 remote pilot certificates.

Figure 4: Myself, taking notes, and the Ground Control Crew

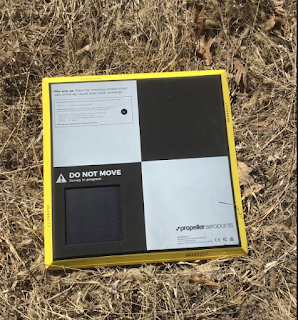

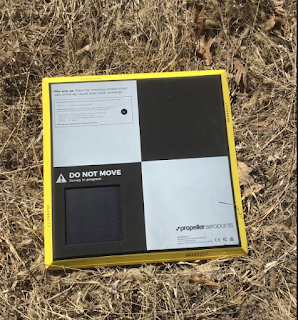

Figure 5 shows the Ground Control Points we deployed. For this mission, we used Propeller AeroPoints. Constructed of high-density foam, these GCPs are rugged, relatively lightweight, and have a built-in GPS. In addition, they are solar powered, have a large memory, and offer centimeter-level accuracy. After as little as 45 minutes of data collection, they can determine their own positions and be used with any GPS-enabled UAS.

Figure 5: Uncased GCPs

Figure 6 shows the Yuneec H520 ST16S Personal Ground Station Controller, powered by Intel. During calibration, the transmitter displayed step-by-step diagrams showing how to position the UAV. One thing I found strange was that the calibration diagrams included the rotors. Although calibrating with the rotors on may lead to a smoother autonomous flight, any malfunction during the calibration phase could greatly increase the likelihood of injury.

Figure 6: Yuneec Transmitter Calibration

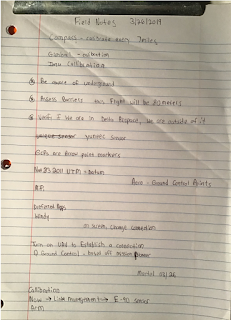

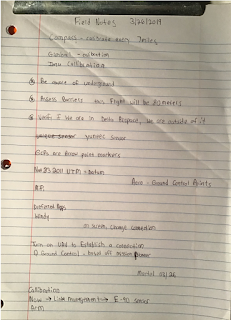

Figure 7 shows my handwritten field notes for this mission. Although most of my other notes were stored on my iPad, I believe handwritten notes are essential because, unlike an iPad, paper does not suffer from constant sun glare, does not depend on battery life, and cannot have its storage unintentionally deleted.

Figure 7: Handwritten Field Notes

At approximately 11:30am, the UAV took off, climbed to altitude, and fell out of the sky. Figure 8 is a close-up of the damage it suffered. As you can see, at least three arms are significantly damaged, and most of the motors were twisted from the propellers. After any UAS crash, the crew must evaluate the circumstances and the damage and decide whether the crash has to be reported. Because there was no collision with another aircraft, no one was injured, and no property other than the UAV itself was damaged, this crash was not reported to either the FAA or the NTSB.
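The reporting decision described above can be sketched as a small decision function. This is a simplified, hypothetical illustration, assuming the criteria in 14 CFR 107.9 (FAA: serious injury, loss of consciousness, or more than $500 of damage to property other than the sUAS) and 49 CFR 830.2 (NTSB: death, serious injury, or substantial damage to an unmanned aircraft of 300 lb or more maximum gross takeoff weight). The class and field names are invented for illustration, and the regulations' full definitions of terms like "serious injury" and "substantial damage" should be checked before relying on it.

```python
from dataclasses import dataclass

# Assumed threshold from 14 CFR 107.9(b): damage to property other than the
# sUAS is reportable unless repair cost (or fair market value, if a total
# loss) is $500 or less.
FAA_PROPERTY_THRESHOLD_USD = 500.0

# Assumed threshold from 49 CFR 830.2: substantial damage to the unmanned
# aircraft only counts when its max gross takeoff weight is 300 lb or more.
NTSB_WEIGHT_THRESHOLD_LB = 300.0


@dataclass
class CrashFacts:
    """Hypothetical summary of a sUAS mishap (illustrative field names)."""
    death: bool
    serious_injury: bool
    loss_of_consciousness: bool
    third_party_damage_usd: float  # repair cost or value of property other than the sUAS
    max_takeoff_weight_lb: float
    aircraft_substantially_damaged: bool


def faa_report_required(f: CrashFacts) -> bool:
    """Simplified 14 CFR 107.9 check: injury/consciousness or third-party damage over $500."""
    return (f.death or f.serious_injury or f.loss_of_consciousness
            or f.third_party_damage_usd > FAA_PROPERTY_THRESHOLD_USD)


def ntsb_report_required(f: CrashFacts) -> bool:
    """Simplified 49 CFR 830.2 unmanned aircraft accident check."""
    heavy_and_damaged = (f.max_takeoff_weight_lb >= NTSB_WEIGHT_THRESHOLD_LB
                         and f.aircraft_substantially_damaged)
    return f.death or f.serious_injury or heavy_and_damaged


# The Martel Forest crash as described: nobody hurt, no third-party damage,
# and the UAV destroyed. The weight is a placeholder; any Part 107 aircraft
# is under 55 lb, far below the NTSB threshold.
martel = CrashFacts(death=False, serious_injury=False, loss_of_consciousness=False,
                    third_party_damage_usd=0.0, max_takeoff_weight_lb=5.0,
                    aircraft_substantially_damaged=True)
print(faa_report_required(martel), ntsb_report_required(martel))
```

Note that damage to the UAV itself never triggers the FAA report under this sketch, which matches the reasoning above: a wrecked aircraft alone did not make this crash reportable.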

Figure 8: UAV Crash Site

What Went Wrong?

Despite having a team of roughly 15 UAS students, including two people who use this UAS regularly, we believe the accident was caused by a combination of poor crew resource management and unclear manufacturer instructions. Figure 9, a screenshot taken 22 seconds into the video, shows the UAV working properly. In Figure 10, one second later, the UAV has abruptly stopped working and shut off completely as it falls to the ground.

Figure 9: 22 Seconds

Figure 10: 23 Seconds

Results

After reviewing the video several times and discussing crew resource management with the pilots and the rest of the class, we believe the UAV suffered a catastrophic malfunction during its transition to autopilot mode. Tracing back through the events of the UAS setup, we believe that improper battery installation ultimately led to the malfunction. Because the UAV abruptly fell out of the sky after maneuvering slightly to line up on a waypoint, we speculate that the battery somehow detached during that shift. Since the detachment was not the result of flying in inclement conditions, it is possible that someone installed the battery improperly during pre-flight procedures.

In addition, the Yuneec has an unusual battery latching design that can confuse pilots who regularly fly multiple platforms. On a DJI platform, for example, when the battery clicks into the UAV, there is no need to question whether it is secure, because the click confirms that the battery will not be dislodged in flight. On the Yuneec, the battery did click when placed into the UAV, but the click did not guarantee that the battery was secure. The false assumption that a click meant the battery was secure, combined with poor crew resource management, caused the Yuneec H520 to fall out of the sky. In my next blog post, I provide an overview of crew resource management as it applies to UAS.