Looking at a LiDAR Point Cloud Collected by the L1 and Generated in DJI Terra

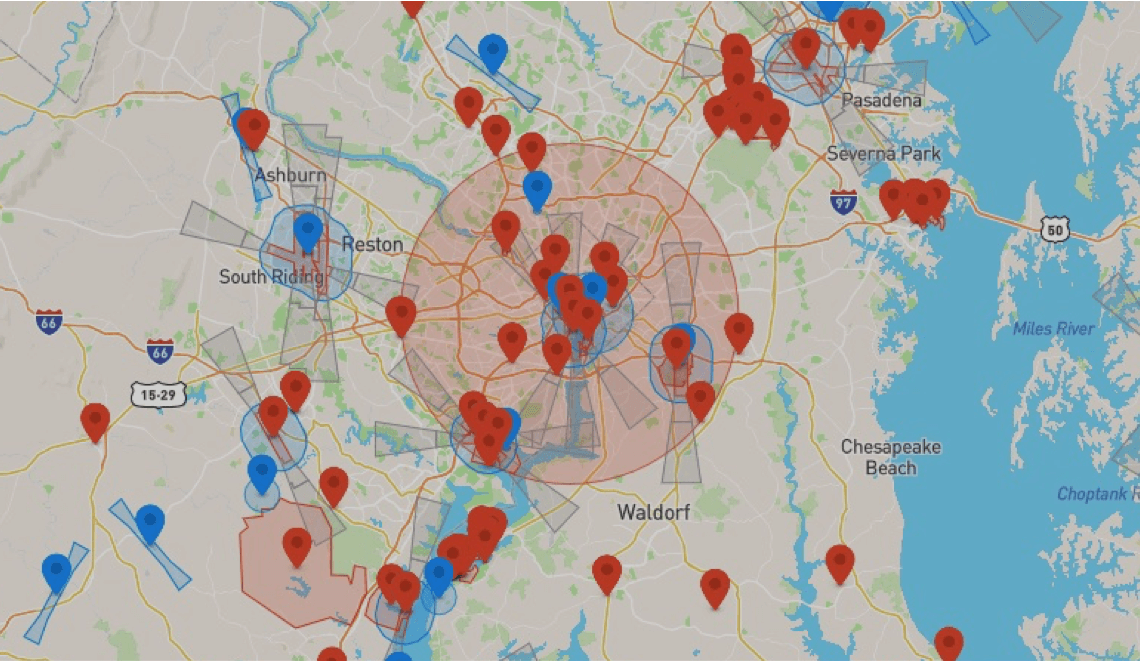

Overview

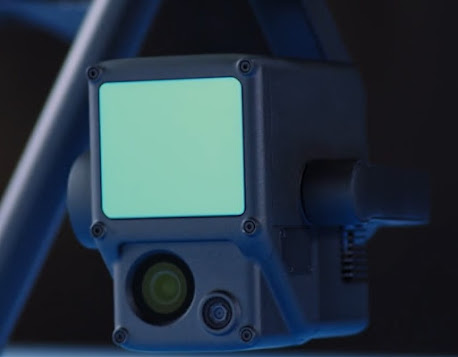

Last year, I had the chance to rent a DJI Matrice 300 RTK and test its L1 LiDAR sensor (Figure 1). The L1 is DJI's first commercial LiDAR system, and its affordability caught my attention. Although I'd love to operate a $100,000+ survey-grade LiDAR system with centimeter-level accuracy, my current resources don't allow me to afford one. Renting a survey-grade LiDAR system is also significantly pricier than renting an entry-level one.

Figure 1: DJI L1

The point clouds generated by this system were impressive, particularly in areas with dense vegetation. However, visually pleasing point clouds are useless if we can't use them! This post shares my experience with the L1 to help you understand what it takes to make entry-level LiDAR work.

What Is Entry-Level LiDAR?

There is no formal definition of entry-level LiDAR, but I classify the L1 as an entry-level system because, in my opinion, although it is not a survey-grade system, many people will buy it as their first LiDAR UAS due to its simplicity, availability, and affordability. Is it possible to obtain survey-grade data using the L1? Since I am not a licensed surveyor, I shouldn't answer that question definitively; however, I believe so.

Nevertheless, I do not think the L1 will deliver survey-grade results accurately and repeatably unless you are extremely careful in selecting the right settings, planning the right missions, and correctly integrating traditional survey data in the right software. Here is a question for you: are the $150,000 survey-grade LiDAR UAS user-friendly? I assume they are user-friendly enough for their makers to stay in business, but I ask because many seem to require hardware and software training.

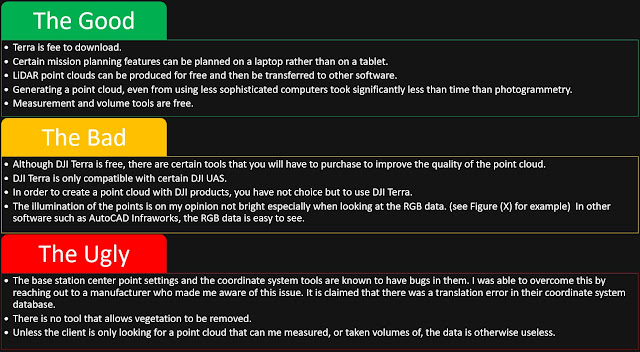

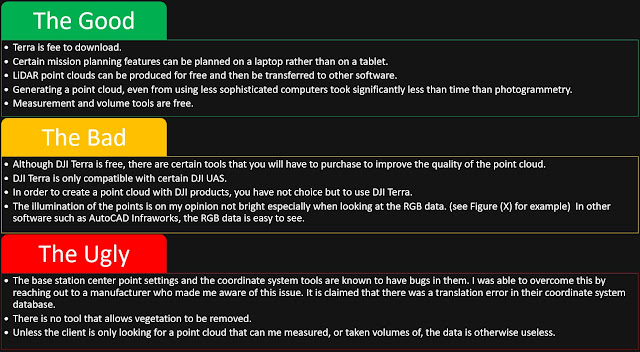

DJI Terra: The Good, the Bad, and the Ugly

According to DJI's website, DJI Terra is PC application software that mainly relies on 2D orthophotos and 3D model reconstruction, with functions such as 2D multispectral reconstruction, LiDAR point cloud processing, and detailed inspection missions. Released in 2020, DJI Terra is an alternative to other photogrammetry platforms such as Pix4D, DroneDeploy, and ContextCapture. Figure 2 is a link to DJI Terra, while Figure 3 details what I think is good, bad, and ugly about DJI Terra and its capabilities.

Figure 2: Link to DJI Terra

Figure 3: DJI Terra: The Good, the Bad, and the Ugly

Visualization and Entry-Level Tools

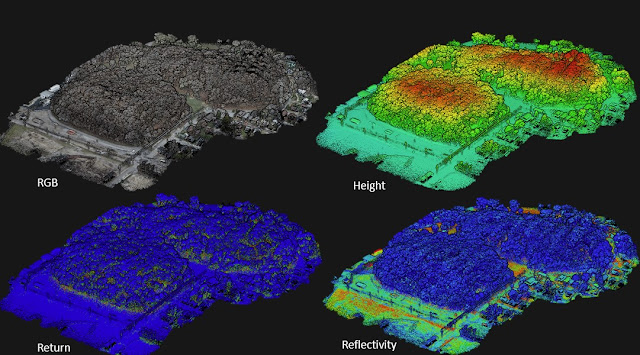

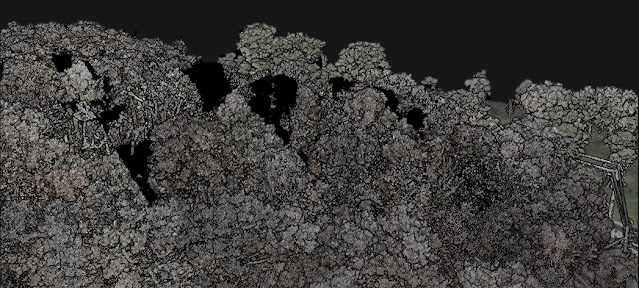

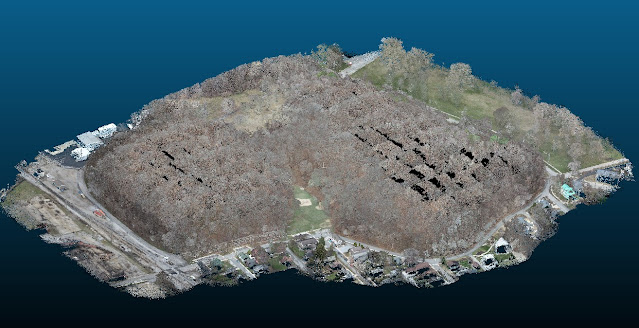

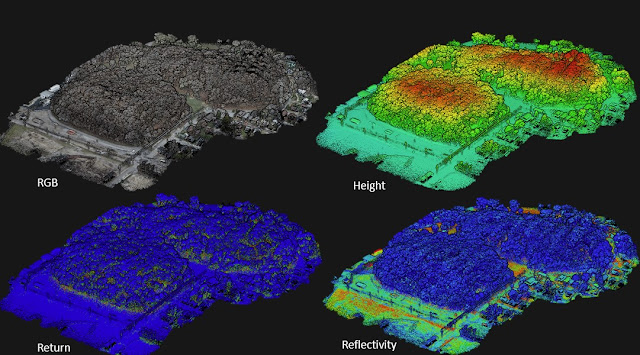

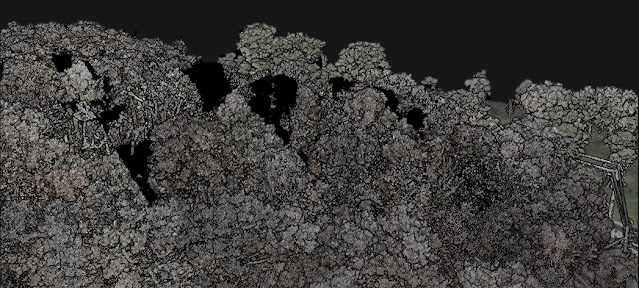

As seen in Figure 4, DJI Terra can display points by RGB value, height, return, and reflectivity. Once the display is populated, the user can zoom into features of interest. One of my first concerns while viewing the RGB display was the occasional dark spot along a tree line (Figure 5).

Figure 4: Point Displays in DJI Terra

Figure 5: Spots Where Photogrammetry Colorization Was Missed
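DJI Terra's internal color ramps aren't described in this post, but the idea behind a height display is simple: normalize each point's elevation between the cloud's minimum and maximum, then map it onto a color ramp. A minimal Python sketch, assuming points are (x, y, z) tuples and using a hypothetical blue-to-red ramp rather than DJI Terra's actual palette:

```python
def height_to_rgb(z, z_min, z_max):
    """Map an elevation onto a hypothetical blue (low) to red (high) ramp."""
    if z_max <= z_min:
        raise ValueError("z_max must be greater than z_min")
    # Normalize to [0, 1] and clamp anything outside the range
    t = min(1.0, max(0.0, (z - z_min) / (z_max - z_min)))
    return (round(255 * t), 0, round(255 * (1 - t)))


def colorize_by_height(points):
    """Return one (r, g, b) color per (x, y, z) point, scaled to the cloud's elevation range."""
    zs = [p[2] for p in points]
    z_min, z_max = min(zs), max(zs)
    return [height_to_rgb(p[2], z_min, z_max) for p in points]


# Usage: three points 5 units apart in elevation
colors = colorize_by_height([(0, 0, 100.0), (1, 0, 105.0), (2, 0, 110.0)])
print(colors[0], colors[-1])  # (0, 0, 255) (255, 0, 0)
```

A perfectly flat cloud has no elevation range, so this sketch raises an error rather than guessing a color.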

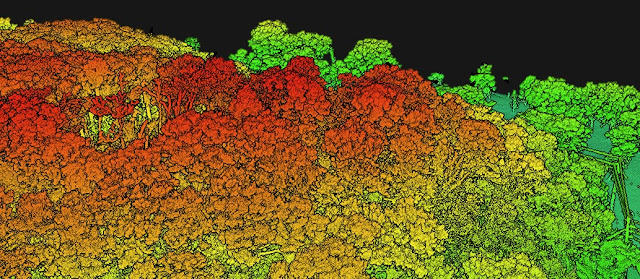

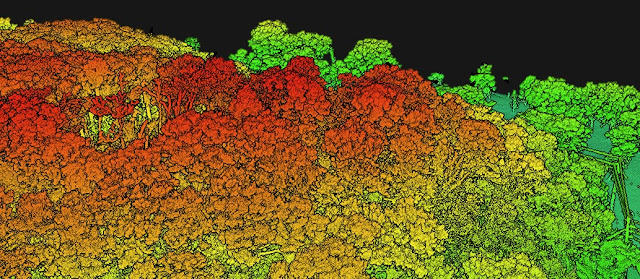

The dark spots are areas missed by the photogrammetry camera integrated into the L1 unit. If this were a photogrammetry point cloud, the dark spots would represent missing data. Since the imagery in this case only colorizes the LiDAR point cloud rather than constructing a surface, no actual "holes" were found, as confirmed in Figure 6. However, missing colors could be a major disadvantage if you rely on a colorized point cloud to identify features.

Figure 6: Points Classified by Elevation Have No Dark Spots
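One rough way to gauge how much colorization was missed is to flag points whose color is at or near black. A Python sketch, assuming each point is an (x, y, z, r, g, b) tuple with 8-bit color and a hypothetical darkness threshold of 10. Many LAS files store 16-bit color instead, and genuinely dark surfaces such as shadows or fresh asphalt will also be flagged, so treat the count as an indicator only:

```python
def find_uncolored_points(points, threshold=10):
    """Indices of points whose r, g, and b values are all <= threshold.

    Assumes (x, y, z, r, g, b) tuples with 8-bit color; the default threshold
    is a hypothetical cutoff, not a DJI setting.
    """
    return [
        i
        for i, (_x, _y, _z, r, g, b) in enumerate(points)
        if max(r, g, b) <= threshold
    ]


def uncolored_fraction(points, threshold=10):
    """Share of points that appear to have been missed by the camera."""
    if not points:
        return 0.0
    return len(find_uncolored_points(points, threshold)) / len(points)


cloud = [
    (0.0, 0.0, 10.0, 0, 0, 0),        # hypothetical point missed by the camera
    (1.0, 0.0, 10.2, 120, 130, 90),   # hypothetical vegetation point
    (2.0, 0.0, 10.1, 5, 3, 8),        # near-black: also flagged
    (3.0, 0.0, 10.0, 200, 200, 200),  # hypothetical pavement marking
]
print(find_uncolored_points(cloud), uncolored_fraction(cloud))  # [0, 2] 0.5
```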

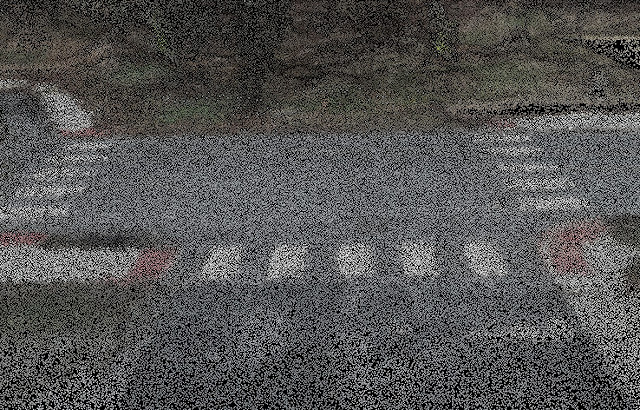

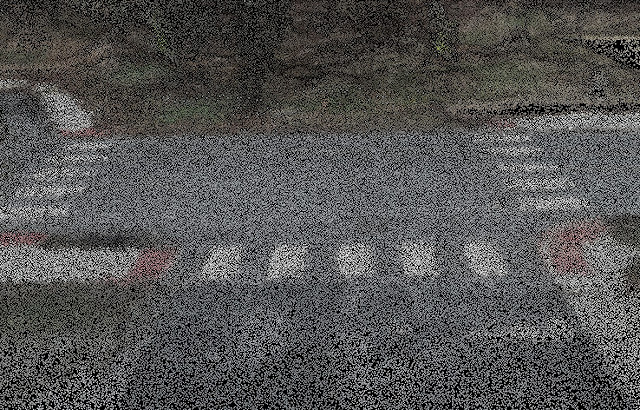

In Figure 7, larger pavement markings can be identified, but features such as curb boundaries and ground lines are difficult, if not impossible, to identify. If you need to create breaklines for your projects, data from the L1 is unlikely to help. Beyond the basic measurement tools, coordinate system tools, and volume tool in DJI Terra, much more can be done with L1 data if you have access to other data analysis software.

Figure 7: Looking at Pavement Markings

How DJI Terra Compares to Other Software

Pun not intended: Cloud Compare is free, open-source point cloud processing software that can do significantly more with L1 data than DJI Terra. Below are functions I have been able to perform using Cloud Compare:

• Reducing the file size of the point cloud

• Trimming excess data from the point cloud

• Generating surfaces

• Generating contours

• Removing vegetation
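The first two items in that list can be illustrated with a short sketch. The Python below trims points to an XY bounding box and thins the cloud by keeping one point per cubic voxel. It assumes (x, y, z) tuples in a projected coordinate system and only illustrates the idea; it is not how Cloud Compare's own cropping and subsampling tools are implemented:

```python
import math


def trim_to_box(points, x_min, x_max, y_min, y_max):
    """Keep only points inside an axis-aligned XY bounding box (edges inclusive)."""
    return [p for p in points if x_min <= p[0] <= x_max and y_min <= p[1] <= y_max]


def voxel_subsample(points, voxel_size):
    """Keep the first point that falls in each cube of edge length voxel_size.

    The result depends on input order; averaging the points in each voxel is a
    common alternative.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    kept = {}
    for x, y, z in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        # setdefault stores the point only if this voxel has not been seen yet
        kept.setdefault(key, (x, y, z))
    return list(kept.values())


cloud = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (1.5, 0.1, 0.1), (5.0, 5.0, 5.0)]
trimmed = trim_to_box(cloud, 0.0, 2.0, 0.0, 2.0)  # drops the far-away point
thinned = voxel_subsample(cloud, 1.0)             # first two points share a voxel
print(len(trimmed), len(thinned))  # 3 3
```

Larger voxels shrink the file more but discard more detail, so the voxel size is a trade-off to choose per project.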

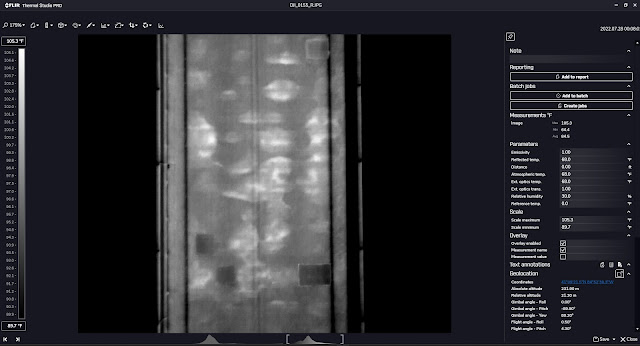

In Figure 8, the L1 data is shown in Cloud Compare. One immediate observation is that the RGB display in Cloud Compare appears brighter and clearer than in DJI Terra. Despite this advantage, the L1's RGB point display still makes objects difficult to identify.

Figure 8: L1 Data Using Cloud Compare

Data Analysis

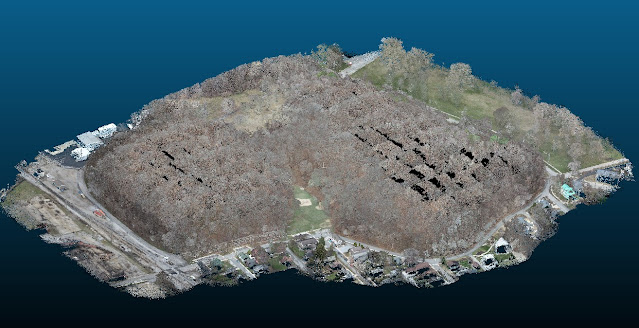

To assess the quality of LiDAR data, one useful approach is to check for gaps or holes hidden beneath the vegetation. Although I cannot disclose every detail of my LiDAR data analysis, my workflow allows me to remove vegetation without discarding the data required for surface generation. In Figure 9, you can see the point cloud with the vegetation removed and the ground points color-coded by height. For a closer look at the vegetation removal, see Figure 10.

Figure 9: Vegetation Removal Using Cloud Compare

Figure 10: Looking at Vegetation Removal
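Since the workflow above isn't disclosed, here is a deliberately simple stand-in, not that workflow: a grid-minimum ground filter that keeps points within a height tolerance of the lowest point in each XY cell, followed by a check for empty cells that may indicate holes in ground coverage. The cell size and tolerance are hypothetical parameters, and more robust filters such as the Cloth Simulation Filter (CSF) handle slopes and dense canopy far better:

```python
import math


def _cell(x, y, cell_size):
    """XY grid cell index containing (x, y)."""
    return (math.floor(x / cell_size), math.floor(y / cell_size))


def grid_min_ground_filter(points, cell_size, height_tolerance):
    """Split (x, y, z) points into (ground, non_ground).

    A point is treated as ground if it lies within height_tolerance of the
    lowest point in its cell. Cells with no true ground returns will wrongly
    keep low vegetation, which is why the gap check below matters.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    lowest = {}
    for x, y, z in points:
        c = _cell(x, y, cell_size)
        lowest[c] = min(z, lowest.get(c, z))
    ground, non_ground = [], []
    for x, y, z in points:
        target = ground if z - lowest[_cell(x, y, cell_size)] <= height_tolerance else non_ground
        target.append((x, y, z))
    return ground, non_ground


def empty_cells(points, cell_size):
    """Cells inside the points' bounding grid that contain no points (possible holes)."""
    occupied = {_cell(x, y, cell_size) for x, y, _z in points}
    if not occupied:
        return []
    xs = [i for i, _ in occupied]
    ys = [j for _, j in occupied]
    return [
        (i, j)
        for i in range(min(xs), max(xs) + 1)
        for j in range(min(ys), max(ys) + 1)
        if (i, j) not in occupied
    ]


cloud = [
    (0.5, 0.5, 100.0), (0.6, 0.4, 100.1), (0.5, 0.5, 108.0),  # cell (0, 0)
    (2.5, 0.5, 101.0), (2.4, 0.6, 115.0),                      # cell (2, 0)
]
ground, canopy = grid_min_ground_filter(cloud, cell_size=1.0, height_tolerance=0.3)
print(len(ground), len(canopy), empty_cells(ground, 1.0))  # 3 2 [(1, 0)]
```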

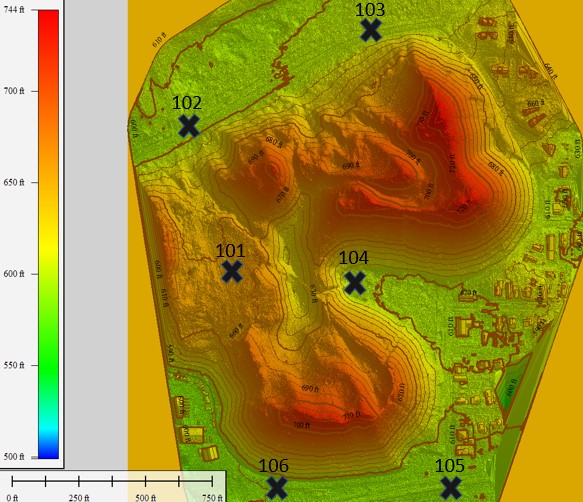

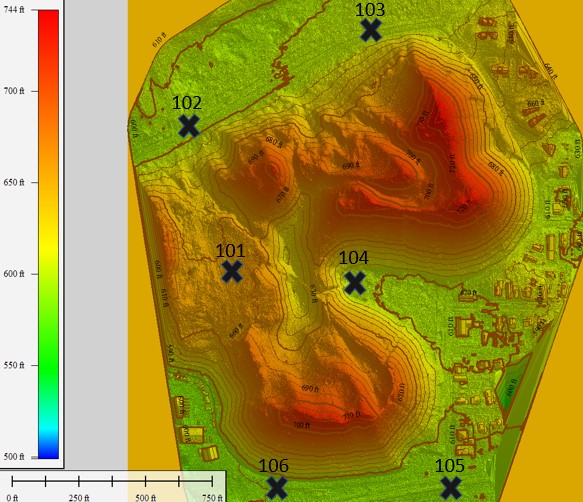

In Figure 11, the data from the L1 is presented as a surface with contour lines. Note that although the surface was checked against survey-grade control points and had an RMSE of approximately 1 inch, this does not guarantee that the entire dataset is accurate to within one inch. Due to the hazardous terrain, dense vegetation, and budget constraints of the project, it was not possible to survey the entire site.

Despite these logistical limitations, the data was still deemed "good enough" for preliminary design and provided valuable insight into the topography of the site beneath the vegetation. After the point cloud was converted into a surface, contours were extracted, overlaid onto the surface, and integrated into CAD.
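The RMSE check described above compares surface elevations against surveyed checkpoint elevations. A minimal Python sketch of the standard vertical RMSE, sqrt(sum((z_surface - z_control)^2) / n); the residuals in the usage line are made up for illustration and are not this project's data:

```python
import math


def vertical_rmse(surface_z, control_z):
    """Root-mean-square of elevation differences at checkpoints (same units in, same units out)."""
    if not surface_z or len(surface_z) != len(control_z):
        raise ValueError("need two non-empty lists of equal length")
    squared = [(s - c) ** 2 for s, c in zip(surface_z, control_z)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical residuals in inches at four checkpoints
print(vertical_rmse([1.0, -1.0, 1.0, -1.0], [0.0, 0.0, 0.0, 0.0]))  # 1.0
```

Because RMSE is computed only at the checkpoints, it says nothing directly about the areas between them, which is exactly the caveat above.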

Note that Figure 11 is not a map and does not represent the final integrated data in CAD. CAD and UAS data integration deserves its own blog post, so we will not discuss it further here.

Figure 11: Example Surface Before CAD Integration
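Contour extraction from a gridded surface typically comes down to two steps: choosing contour elevations at a fixed interval, and finding where each contour crosses the edges of the grid by linear interpolation, as in the marching squares algorithm. A Python sketch of those two steps, assuming the surface is a grid of (x, y, z) nodes; the interval and node values are hypothetical, and the tools used for Figure 11 may work differently:

```python
import math


def contour_levels(z_min, z_max, interval):
    """Contour elevations at whole multiples of interval within [z_min, z_max]."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    first = math.ceil(z_min / interval)
    last = math.floor(z_max / interval)
    return [k * interval for k in range(first, last + 1)]


def edge_crossing(p1, p2, level):
    """(x, y) where the contour at level crosses the grid edge p1-p2, or None.

    p1 and p2 are (x, y, z) grid nodes. Flat edges and edges that lie entirely
    above or below the level are skipped.
    """
    (x1, y1, z1), (x2, y2, z2) = p1, p2
    if z1 == z2 or (z1 - level) * (z2 - level) > 0:
        return None
    t = (level - z1) / (z2 - z1)  # fraction of the way from p1 to p2
    return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))


print(contour_levels(101.3, 104.8, 1.0))                              # [102.0, 103.0, 104.0]
print(edge_crossing((0.0, 0.0, 100.0), (10.0, 0.0, 104.0), 101.0))   # (2.5, 0.0)
```

A full contouring routine repeats the crossing test over every edge of every grid cell and joins the crossings into polylines.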

Conclusion

Entry-level LiDAR systems, such as the L1, can provide value to projects that require a moderate level of absolute accuracy, typically within 1-2 inches. However, claims of centimeter-level accuracy must be scrutinized to determine whether they refer to relative or absolute accuracy. The L1 may also be unsuitable for projects that demand detailed feature extraction because of its suboptimal point cloud colorization. Colorization can be improved by combining the L1 point cloud with an orthomosaic from a higher-quality camera, such as the P1, but this approach may require additional fieldwork, which has its own limitations.
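The distinction between relative and absolute accuracy is easy to see with a toy example. In the Python sketch below (made-up coordinates), a point cloud shifted as a whole by 0.1 units keeps perfect relative accuracy, since distances between points are preserved, yet every point is 0.1 units off in absolute terms:

```python
import math
from itertools import combinations


def absolute_errors(measured, truth):
    """Distance from each measured point to its surveyed position."""
    return [math.dist(m, t) for m, t in zip(measured, truth)]


def relative_errors(measured, truth):
    """Error in every point-to-point distance, i.e. how well internal geometry is kept."""
    return [
        abs(math.dist(measured[i], measured[j]) - math.dist(truth[i], truth[j]))
        for i, j in combinations(range(len(truth)), 2)
    ]


truth = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
measured = [(x, y, z + 0.1) for x, y, z in truth]  # whole cloud shifted up by 0.1

print(max(absolute_errors(measured, truth)))  # about 0.1
print(max(relative_errors(measured, truth)))  # 0.0
```

A vendor figure computed from point-to-point distances within the cloud would look excellent here, while a check against surveyed control would reveal the offset.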

Several key takeaways emerged from a project that used the L1 in a fairly dense forest:

- The LiDAR system was able to capture areas beneath the vegetation, which is significant.

- A 30-minute flight time could cover approximately 60 acres, which is impressive.

- Generating a LiDAR point cloud was surprisingly fast.

- Vehicle movement caused significant noise in the point cloud, which is something to consider when planning a LiDAR project.

- To get the most out of LiDAR hardware, software beyond DJI Terra will be necessary.

- When viewing profiles of hard surfaces, L1 point clouds exhibit "fuzz" due to the limitations of the hardware.

- Photogrammetry with survey-grade ground control points (GCPs) appears to work better on hard surfaces than LiDAR.

- If survey-grade LiDAR deliverables are required, the investment may be around $500,000.
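The coverage figure in the list above implies a rate that is handy for rough planning. A Python sketch of the arithmetic, taking the roughly 60 acres per 30-minute flight at face value; the 150-acre site is hypothetical, and real coverage depends on altitude, speed, overlap, terrain, and battery swaps, none of which are modeled here:

```python
import math

ACRE_M2 = 4046.8564224  # square meters in one international acre


def coverage_rate(acres, minutes):
    """Acres covered per minute of flight."""
    return acres / minutes


def flights_needed(site_acres, acres_per_flight=60.0):
    """Whole flights needed, assuming each flight covers acres_per_flight (~60 acres above)."""
    return math.ceil(site_acres / acres_per_flight)


print(coverage_rate(60, 30))            # 2.0 acres per minute
print(round(60 * ACRE_M2 / 10_000, 1))  # 24.3 hectares per flight
print(flights_needed(150))              # 3 flights for a hypothetical 150-acre site
```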

Overall, the L1 is a suitable entry-level LiDAR system for projects that require moderate absolute accuracy and do not involve detailed feature extraction. However, keep in mind the limitations and challenges that come with this system, such as point cloud fuzziness and noise from vehicle movement. Proper planning and investment in high-quality hardware, software, and GCPs are critical to delivering LiDAR projects that meet their specific requirements.
